Session X : Concurrency

In this Session X : Concurrency, there are 5 subtopics:

- Introduction

- Introduction to Subprogram-Level Concurrency

- Semaphores

- Monitors

Introduction

Concurrency in software execution can occur at four different levels: instruction level (executing two or more machine instructions simultaneously), statement level (executing two or more high-level language statements simultaneously), unit level (executing two or more subprogram units simultaneously), and program level (executing two or more programs simultaneously).

Introduction to Subprogram-Level Concurrency

A task is a unit of a program, similar to a subprogram, that can be in concurrent execution with other units of the same program. Each task in a program can support one thread of control. Tasks are sometimes called processes. In some languages, for example Java and C#, certain methods serve as tasks. Such methods are executed in objects called threads. Tasks usually work together and may be implicitly started, when a program unit starts the execution of a task, it is not necessarily suspended, and when a task’s execution is completed, control may not return to the caller.

There are two general categories of tasks:

- Heavyweight tasks execute in their own address space

- Lightweight tasks all run in the same address space – more efficient

A task is disjoint if it does not communicate with or affect the execution of any other task in the program in any way

Synchronization is a mechanism that controls the order in which tasks execute. Two kinds of synchronization are required when tasks share data: cooperation and competition. Cooperation synchronization is required between task A and task B when task A must wait for task B to complete some specific activity before task A can begin or continue its execution. Competition synchronization is required between two tasks when both require the use of some resource that cannot be simultaneously used.

A run-time system program called a scheduler manages the sharing of processors among the tasks, If there were never any interruptions and tasks all had the same priority, the scheduler could simply give each task a time slice, such as 0.1 second, and when a task’s turn came, the scheduler could let it execute on a processor for that amount of time.

Task Execution States :

- New – created but not yet started

- Ready – ready to run but not currently running (no available processor)

- Running

- Blocked – has been running, but cannot now continue (usually waiting for some event to occur)

- Dead – no longer active in any sense

Semaphores

A semaphore is a simple mechanism that can be used to provide synchronization of tasks. Although semaphores are an early approach to providing synchronization, they are still used, both in contemporary languages and in library-based concurrency support systems. Semaphores can be used to implement guards on the code that accesses shared data structures and to provide both competition and cooperation synchronization. Semaphores have only two operations, wait and release .

Cooperation Synchronization with Semaphores

For Example: A shared buffer

The buffer is implemented as an ADT with the operations DEPOSIT and FETCH as the only ways to access the buffer, it uses two semaphores for cooperation: emptyspots and fullspots. The semaphore counters are used to store the numbers of empty spots and full spots in the buffer. DEPOSIT must first check emptyspots to see if there is room in the buffer. If there is room, the counter of emptyspots is decremented and the value is inserted and if there is no room, the caller is stored in the queue of emptyspots

When DEPOSIT is finished, it must increment the counter of fullspots , FETCH must first check fullspots to see if there is a value. If there is a full spot, the counter of fullspots is decremented and the value is removed. If there are no values in the buffer, the caller must be placed in the queue of fullspots, when FETCH is finished, it increments the counter of emptyspots

The operations of FETCH and DEPOSIT on the semaphores are accomplished through two semaphore operations named wait and release

Competition Synchronization

This semaphore need not count anything but can simply indicate with its counter whether the buffer is currently being used. The wait statement allows the access only if the semaphore’s counter has the value 1, which indicates that the shared buffer is not currently being accessed. If the semaphore’s counter has a value of 0, there is a current access taking place, and the task is placed in the queue of the semaphore. Notice that the semaphore’s counter must be initialized to 1. The queues of semaphores must always be initialized to empty before use of the queue can begin. A semaphore that requires only a binary-valued counter, like the one used to provide competition synchronization in the following example, is called a binary semaphore.

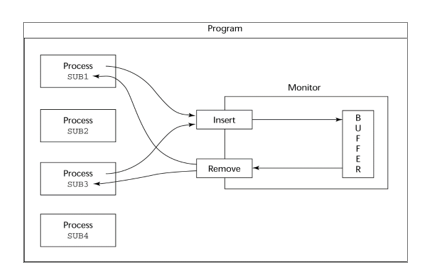

Monitors

One solution to some of the problems of semaphores in a concurrent environment is to encapsulate shared data structures with their operations and hide their representations—that is, to make shared data structures abstract data types with some special restrictions. This solution can provide competition synchronization without semaphores by transferring responsibility for synchronization to the run-time system.

Competition Synchronization

One of the most important features of monitors is that shared data is resident in the monitor rather than in any of the client units. The programmer does not synchronize mutually exclusive access to shared data through the use of semaphores or other mechanisms. Because the access mechanisms are part of the monitor, implementation of a monitor can be made to guarantee synchronized access by allowing only one access at a time. Calls to monitor procedures are implicitly blocked and stored in a queue if the monitor is busy at the time of the call.

Cooperation Synchronization

Although mutually exclusive access to shared data is intrinsic with a monitor, cooperation between processes is still the task of the programmer. In particular, the programmer must guarantee that a shared buffer does not experience underflow or overflow. Different languages provide different ways of programming cooperation synchronization, all of which are related to semaphores.

No Comments »

RSS feed for comments on this post. TrackBack URL