Session V: Expression and Assignment Statements

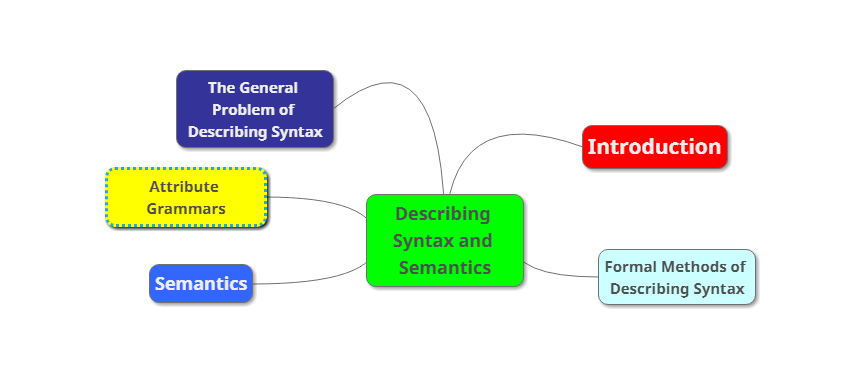

Session V, Expression and Assignment Statements, covers eight subtopics:

- Introduction

- Arithmetic Expressions

- Overloaded Operators

- Type Conversions

- Relational and Boolean Expressions

- Short-Circuit Evaluation

- Assignment Statements

- Mixed-Mode Assignment

Introduction

Expressions are the fundamental means of specifying computations in a programming language. It is crucial for a programmer to understand both the syntax and semantics of expressions of the language being used. To understand expression evaluation, it is necessary to be familiar with the orders of operator and operand evaluation. The operator evaluation order of expressions is dictated by the associativity and precedence rules of the language. Although the value of an expression sometimes depends on it, the order of operand evaluation in expressions is often unstated by language designers. This allows implementors to choose the order, which leads to the possibility of programs producing different results in different implementations. Other issues in expression semantics are type mismatches, coercions, and short-circuit evaluation.

The essence of the imperative programming languages is the dominant role of assignment statements. The purpose of these statements is to cause the side effect of changing the values of variables, or the state, of the program. So an integral part of all imperative languages is the concept of variables whose values change during program execution. Functional languages use variables of a different sort, such as the parameters of functions. These languages also have declaration statements that bind values to names. These declarations are similar to assignment statements, but do not have side effects.

Arithmetic Expressions

Automatic evaluation of arithmetic expressions similar to those found in mathematics, science, and engineering was one of the primary goals of the first high-level programming languages. Most of the characteristics of arithmetic expressions in programming languages were inherited from conventions that had evolved in mathematics. In programming languages, arithmetic expressions consist of operators, operands, parentheses, and function calls. An operator can be unary, meaning it has a single operand, binary, meaning it has two operands, or ternary, meaning it has three operands. In most programming languages, binary operators are infix, which means they appear between their operands. One exception is Perl, which has some operators that are prefix, which means they precede their operands. The purpose of an arithmetic expression is to specify an arithmetic computation. An implementation of such a computation must cause two actions: fetching the operands, usually from memory, and executing arithmetic operations on those operands.

- A unary operator has one operand

- A binary operator has two operands

- A ternary operator has three operands

The operator associativity rules for expression evaluation define the order in which adjacent operators with the same precedence level are evaluated.

Typical associativity rules:

- Left to right, except the exponentiation operator **, which is right to left

- Sometimes unary operators associate right to left (e.g., in FORTRAN)

Precedence and associativity rules can be overridden with parentheses.
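As a small illustration of these rules, the following Java sketch evaluates four expressions over the same three values. The class name PrecedenceDemo and the values are made up for this example. Java has no ** operator, so only precedence, left associativity, and parentheses are shown.

```java
import java.util.Arrays;

// Illustrative sketch (hypothetical class name): precedence, associativity,
// and parentheses applied to the same three operands.
class PrecedenceDemo {
    static int[] results() {
        int a = 10, b = 4, c = 3;
        return new int[] {
            a + b * c,    // * has higher precedence than +: 10 + (4 * 3)
            (a + b) * c,  // parentheses override precedence: (10 + 4) * 3
            a - b - c,    // - is left associative: (10 - 4) - 3
            a - (b - c)   // parentheses override associativity: 10 - (4 - 3)
        };
    }

    public static void main(String[] args) {
        System.out.println(Arrays.toString(results())); // prints [22, 42, 3, 9]
    }
}
```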

Operand evaluation order:

1. Variables: fetch the value from memory

2. Constants: sometimes a fetch from memory; sometimes the constant is in the machine language instruction

3. Parenthesized expressions: evaluate all operands and operators first

4. Function calls: the most interesting case, because a function with side effects can change the value of another operand in the same expression
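The function-call case can be sketched in Java, which (unlike languages that leave the choice to the implementor) specifies that operands are evaluated left to right. The names OperandOrderDemo, a, and fun1 are hypothetical; fun1 is written to have a side effect on a, so swapping the operands changes the result.

```java
// Illustrative sketch (hypothetical names): a function with a side effect
// makes the value of an expression depend on operand evaluation order.
class OperandOrderDemo {
    static int a;

    // Side effect: changes a, then returns 3.
    static int fun1() {
        a = 17;
        return 3;
    }

    // a is fetched (5) before fun1() runs, so the sum is 5 + 3.
    static int variableFirst() {
        a = 5;
        return a + fun1();
    }

    // fun1() runs first and sets a to 17, so the sum is 3 + 17.
    static int callFirst() {
        a = 5;
        return fun1() + a;
    }

    public static void main(String[] args) {
        System.out.println(variableFirst()); // prints 8
        System.out.println(callFirst());     // prints 20
    }
}
```

In a language that does not fix the operand evaluation order, either result could come from the same source expression.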

Overloaded Operators

Arithmetic operators are often used for more than one purpose. For example, + usually is used to specify integer addition and floating-point addition. Some languages, Java for example, also use it for string catenation. This multiple use of an operator is called operator overloading and is generally thought to be acceptable, as long as neither readability nor reliability suffers.

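Operator overloading can cause problems:

- Loss of compiler error detection: omitting an operand should be a detectable error, but if the same symbol also has a unary meaning, the resulting expression may still be valid and go unreported

- Some loss of readability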
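The built-in overloading of + in Java can be sketched as follows. The class and method names are made up for this example. It shows the same symbol meaning integer addition, floating-point addition, or string catenation depending on operand types, and how that choice interacts with left-to-right associativity.

```java
// Illustrative sketch (hypothetical names): one operator symbol, three meanings.
class PlusOverloadDemo {
    static int intSum() {
        return 1 + 2;            // integer addition
    }

    static double doubleSum() {
        return 1.5 + 2;          // floating-point addition (2 is coerced to 2.0)
    }

    static String numbersFirst() {
        return 1 + 2 + "3";      // (1 + 2) is integer addition, then catenation
    }

    static String stringFirst() {
        return "1" + 2 + 3;      // ("1" + 2) is catenation, and so is the next +
    }

    public static void main(String[] args) {
        System.out.println(numbersFirst()); // prints 33
        System.out.println(stringFirst());  // prints 123
    }
}
```

The reader has to know the operand types to tell which operation each + performs, which is the readability cost mentioned above.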

User-defined overloading can also be harmful to readability. For one thing, nothing prevents a user from defining + to mean multiplication. Furthermore, seeing an * operator in a program, the reader must find both the types of the operands and the definition of the operator to determine its meaning. Any or all of these definitions could be in other files. Another consideration is the process of building a software system from modules created by different groups. If the different groups overloaded the same operators in different ways, these differences would need to be eliminated before putting the system together. C++ has a few operators that cannot be overloaded. Among these are the class or structure member operator (.) and the scope resolution operator (::). Interestingly, operator overloading was one of the C++ features that was not copied into Java. However, it did reappear in C#.

Type Conversions

Type conversions are either narrowing or widening. A narrowing conversion converts a value to a type that cannot store even approximations of all of the values of the original type. For example, converting a double to a float in Java is a narrowing conversion, because the range of double is much larger than that of float. A widening conversion converts a value to a type that can include at least approximations of all of the values of the original type. For example, converting an int to a float in Java is a widening conversion. Widening conversions are nearly always safe, meaning that the magnitude of the converted value is maintained. They can still lose precision: a 32-bit int can hold more significant digits than a 32-bit float can represent.
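The difference between the two kinds of conversion can be sketched in Java. The class and method names below are hypothetical. The widening case keeps the magnitude but may round the value, while the narrowing cases discard the fraction or fall outside the target type's range.

```java
// Illustrative sketch (hypothetical names): widening vs. narrowing in Java.
class ConversionDemo {
    // Widening int -> float is implicit; converting back shows any rounding.
    static int roundTripThroughFloat(int i) {
        float f = i;
        return (int) f;
    }

    // Narrowing double -> int needs an explicit cast and drops the fraction.
    static int truncate(double d) {
        return (int) d;
    }

    // Narrowing double -> float: values beyond float's range become infinite.
    static float toFloat(double d) {
        return (float) d;
    }

    public static void main(String[] args) {
        System.out.println(roundTripThroughFloat(123456789)); // prints 123456792
        System.out.println(truncate(3.99));                   // prints 3
        System.out.println(toFloat(1e40));                    // prints Infinity
    }
}
```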

Relational and Boolean Expressions

A relational operator is an operator that compares the values of its two operands. A relational expression has two operands and one relational operator. The value of a relational expression is Boolean, except when Boolean is not a type included in the language. The relational operators are often overloaded for a variety of types. The operation that determines the truth or falsehood of a relational expression depends on the operand types. It can be simple, as for integer operands, or complex, as for character string operands. Typically, the types of the operands that can be used for relational operators are numeric types, strings, and ordinal types. The syntax of the relational operators for equality and inequality differs among some programming languages. For example, for inequality, the C-based languages use !=, Ada uses /=, Lua uses ~=, Fortran 95+ uses .NE. or /=, and ML and F# use <>. JavaScript and PHP have two additional relational operators, === and !==. These are similar to their relatives, == and !=, but prevent their operands from being coerced.

Boolean expressions consist of Boolean variables, Boolean constants, relational expressions, and Boolean operators. The operators usually include those for the AND, OR, and NOT operations, and sometimes for exclusive OR and equivalence. Boolean operators usually take only Boolean operands (Boolean variables, Boolean literals, or relational expressions) and produce Boolean values.
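A brief Java sketch of relational and Boolean expressions follows. All names are made up for this example. Note one Java-specific assumption: Java does not overload < for strings, so the string comparison uses the library method compareTo, which performs the more complex character-by-character comparison.

```java
// Illustrative sketch (hypothetical names): relational expressions yield
// boolean values, and Boolean operators combine them.
class RelationalDemo {
    static boolean intLess(int x, int y) {
        return x < y;                    // simple comparison of numeric operands
    }

    static boolean charLess(char x, char y) {
        return x < y;                    // ordinal type: compares character codes
    }

    static boolean stringLess(String x, String y) {
        return x.compareTo(y) < 0;       // lexicographic comparison via a method
    }

    static boolean inRange(int x, int low, int high) {
        return low <= x && x <= high;    // two relational expressions joined by AND
    }

    public static void main(String[] args) {
        System.out.println(intLess(3, 5));                // prints true
        System.out.println(stringLess("apple", "banana")); // prints true
        System.out.println(inRange(11, 1, 10));           // prints false
    }
}
```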

Short-Circuit Evaluation

A short-circuit evaluation of an expression is one in which the result is determined without evaluating all of the operands and/or operators. Consider a table-lookup loop in Java that searches an array list of length listlen for the value key: index starts at 0 and is incremented while (index < listlen) && (list[index] != key) is true. If evaluation is not short-circuit, both relational expressions in the Boolean expression of the while statement are evaluated, regardless of the value of the first. Thus, if key is not in list, the program will terminate with a subscript out-of-range exception. The iteration that has index == listlen will reference list[listlen], which causes the indexing error because list is declared to have listlen-1 as an upper-bound subscript value. If a language provides short-circuit evaluation of Boolean expressions and it is used, this is not a problem. In the preceding example, a short-circuit evaluation scheme would evaluate the first operand of the AND operator, but it would skip the second operand if the first operand is false.

In the C-based languages, the usual AND and OR operators, && and ||, respectively, are short-circuit. However, these languages also have bitwise AND and OR operators, & and |, respectively, that can be used on Boolean-valued operands and are not short-circuit. Of course, the bitwise operators are only equivalent to the usual Boolean operators if all operands are restricted to being either 0 (for false) or 1 (for true). All of the logical operators of Ruby, Perl, ML, F#, and Python are short-circuit evaluated. Ada includes both ordinary operators (and, or) and short-circuit forms (and then, or else). This is arguably the best design, because it gives the programmer the flexibility of choosing short-circuit evaluation for any Boolean expression for which it is appropriate.
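The lookup loop that this section describes in terms of key, list, listlen, and index can be sketched in Java as follows. The class and method names are hypothetical, and the second method deliberately swaps && for the non-short-circuit & to reproduce the failure described above.

```java
// Illustrative sketch (hypothetical names): table lookup with and without
// short-circuit evaluation of the loop condition.
class ShortCircuitDemo {
    // Returns the position of key in list, or list.length if key is absent.
    static int find(int[] list, int key) {
        int listlen = list.length;
        int index = 0;
        // && skips list[index] once index < listlen is false.
        while ((index < listlen) && (list[index] != key)) {
            index = index + 1;
        }
        return index;
    }

    // Same loop with &, which always evaluates both operands.
    static int findEager(int[] list, int key) {
        int listlen = list.length;
        int index = 0;
        // When key is absent, list[listlen] is referenced and an exception is thrown.
        while ((index < listlen) & (list[index] != key)) {
            index = index + 1;
        }
        return index;
    }

    public static void main(String[] args) {
        int[] list = {4, 8, 15};
        System.out.println(find(list, 7)); // prints 3 (key not found)
        try {
            findEager(list, 7);
        } catch (ArrayIndexOutOfBoundsException e) {
            System.out.println("subscript out of range");
        }
    }
}
```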

Assignment Statements

Nearly all programming languages currently being used use the equal sign for the assignment operator. All of these must use something different from an equal sign for the equality relational operator to avoid confusion with their assignment operator. ALGOL 60 pioneered the use of := as the assignment operator, which avoids the confusion of assignment with equality. Ada also uses this assignment operator.

The design choices of how assignments are used in a language have varied widely. In some languages, such as Fortran and Ada, an assignment can appear only as a stand-alone statement, and the destination is restricted to a single variable. There are, however, many alternatives. A compound assignment operator is a shorthand method of specifying a commonly needed form of assignment. The form of assignment that can be abbreviated with this technique has the destination variable also appearing as the first operand in the expression on the right side. For example, a = a + b can be written as a += b.
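Some of these alternatives can be sketched in Java, where an assignment is an expression that produces a value. The class, method, and variable names are made up for this example. It shows a compound assignment, a chained assignment, and an assignment embedded in a loop condition.

```java
// Illustrative sketch (hypothetical names): forms of assignment in Java.
class AssignmentDemo {
    // Compound assignment: sum += value abbreviates sum = sum + value.
    static int compound() {
        int sum = 10;
        int value = 5;
        sum += value;
        return sum;
    }

    // Assignment is right associative: b = 7 is evaluated first,
    // and its value is then assigned to a.
    static int chained() {
        int a, b;
        a = b = 7;
        return a + b;
    }

    // Assignment used as an operand: x receives each element inside the condition.
    static int sumUntilZero(int[] data) {
        int total = 0, i = 0, x;
        while (i < data.length && (x = data[i++]) != 0) {
            total += x;
        }
        return total;
    }

    public static void main(String[] args) {
        System.out.println(compound());                          // prints 15
        System.out.println(chained());                           // prints 14
        System.out.println(sumUntilZero(new int[] {3, 4, 0, 9})); // prints 7
    }
}
```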

Mixed-Mode Assignment

Frequently, assignment statements also are mixed mode. Fortran, C, C++, and Perl use coercion rules for mixed-mode assignment that are similar to those they use for mixed-mode expressions; that is, many of the possible type mixes are legal, with coercion freely applied. In a clear departure from C++, Java and C# allow mixed-mode assignment only if the required coercion is widening. So, an int value can be assigned to a float variable, but not vice versa. Disallowing half of the possible mixed-mode assignments is a simple but effective way to increase the reliability of Java and C#, relative to C and C++.
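The Java rule can be sketched as follows. The class and method names are hypothetical. Widening assignments compile without a cast, while the narrowing assignment is shown only as a comment because the Java compiler rejects it unless an explicit cast is written.

```java
// Illustrative sketch (hypothetical names): mixed-mode assignment in Java.
class MixedModeDemo {
    // Widening: an int value may be assigned to a float variable.
    static float intToFloat(int i) {
        float f = i;
        return f;
    }

    // Narrowing: float to int is not coerced implicitly.
    static int floatToInt(float f) {
        // int i = f;      // rejected at compile time: narrowing coercion
        int i = (int) f;   // an explicit cast is required; the fraction is dropped
        return i;
    }

    // A chain of widening assignments: int -> long -> float -> double.
    static double widenChain(int i) {
        long l = i;
        float f = l;
        double d = f;
        return d;
    }

    public static void main(String[] args) {
        System.out.println(intToFloat(7));
        System.out.println(floatToInt(7.9f)); // prints 7
        System.out.println(widenChain(42));
    }
}
```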
