Session III : Describing Syntax and Semantics

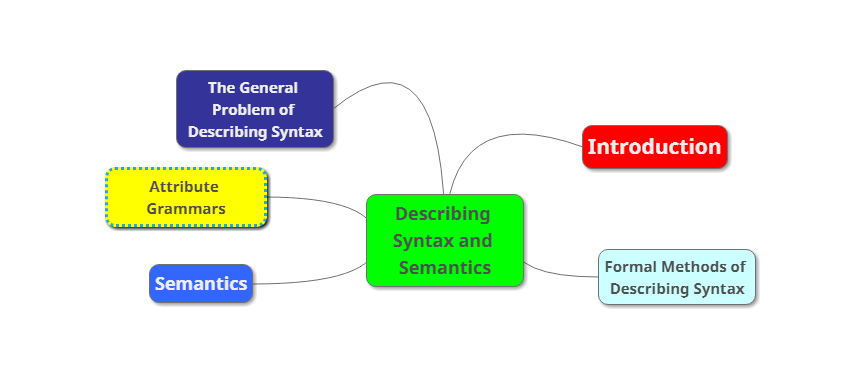

Session III, Describing Syntax and Semantics, covers five subtopics:

- Introduction

- The General Problem of Describing Syntax

- Formal Methods of Describing Syntax

- Attribute Grammars

- Semantics

Introduction

The task of providing a concise yet understandable description of a programming language is difficult but essential to the language’s success. ALGOL 60 and ALGOL 68 were first presented using concise formal descriptions; in both cases, however, the descriptions were not easily understandable, partly because each used a new notation. The levels of acceptance of both languages suffered as a result. On the other hand, some languages have suffered the problem of having many slightly different dialects, a result of a simple but informal and imprecise definition.

One of the problems in describing a language is the diversity of the people who must understand the description. Among these are initial evaluators, implementors, and users. Most new programming languages are subjected to a period of scrutiny by potential users, often people within the organization that employs the language’s designer, before their designs are completed. These are the initial evaluators. The success of this feedback cycle depends heavily on the clarity of the description.

In simple terms, syntax and semantics can be defined as follows:

- Syntax: the form or structure of the expressions, statements, and program units

- Semantics: the meaning of the expressions, statements, and program units

- Syntax and semantics provide a language’s definition

The General Problem of Describing Syntax

A language, whether natural (such as English) or artificial (such as Java), is a set of strings of characters from some alphabet. The strings of a language are called sentences or statements. The syntax rules of a language specify which strings of characters from the language’s alphabet are in the language. English, for example, has a large and complex collection of rules for specifying the syntax of its sentences. By comparison, even the largest and most complex programming languages are syntactically very simple.

Formal descriptions of the syntax of programming languages, for simplicity’s sake, often do not include descriptions of the lowest-level syntactic units. These small units are called lexemes. The description of lexemes can be given by a lexical specification, which is usually separate from the syntactic description of the language. The lexemes of a programming language include its numeric literals, operators, and special words, among others. One can think of programs as strings of lexemes rather than of characters.

Lexemes are partitioned into groups. For example, the names of variables, methods, classes, and so forth in a programming language form a group called identifiers. Each lexeme group is represented by a name, or token. So, a token of a language is a category of its lexemes. For example, an identifier is a token that can have lexemes, or instances, such as sum and total. In some cases, a token has only a single possible lexeme. For example, the token for the arithmetic operator symbol + has just one possible lexeme.
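The lexeme/token distinction can be sketched with a small tokenizer. This is only an illustration: the token names (`IDENT`, `INT_LIT`, `PLUS_OP`, and so on) and the tiny set of lexemes they cover are assumptions made for the example, not part of any particular language's lexical specification.

```python
import re

# Hypothetical token categories for a tiny illustrative language.
# Each entry pairs a token name with a pattern describing its lexemes.
TOKEN_SPEC = [
    ("INT_LIT", r"\d+"),
    ("IDENT", r"[A-Za-z_]\w*"),
    ("PLUS_OP", r"\+"),
    ("MULT_OP", r"\*"),
    ("ASSIGN_OP", r"="),
    ("SEMICOLON", r";"),
    ("SKIP", r"\s+"),  # whitespace separates lexemes but is not a token
]
MASTER = re.compile("|".join(f"(?P<{name}>{pat})" for name, pat in TOKEN_SPEC))


def tokenize(text):
    """Return a list of (token, lexeme) pairs; raise ValueError on an unknown character."""
    pairs = []
    pos = 0
    while pos < len(text):
        m = MASTER.match(text, pos)
        if m is None:
            raise ValueError(f"unexpected character {text[pos]!r} at position {pos}")
        if m.lastgroup != "SKIP":
            pairs.append((m.lastgroup, m.group()))
        pos = m.end()
    return pairs


for token, lexeme in tokenize("total = sum + 2 * index;"):
    print(f"{token:10} {lexeme}")
```

Here `IDENT` has many possible lexemes (`total`, `sum`, `index`), while `PLUS_OP` has exactly one (`+`).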

In general, languages can be formally defined in two distinct ways: by recognition and by generation (although neither provides a definition that is practical by itself for people trying to learn or use a programming language). A recognition device reads input strings over the alphabet of the language and decides whether the input strings belong to the language.

A language generator is a device that can be used to generate the sentences of a language. We can think of the generator as having a button that produces a sentence of the language every time it is pushed. Because the particular sentence that is produced by a generator when its button is pushed is unpredictable, a generator seems to be a device of limited usefulness as a language descriptor.
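To make the two views concrete, here is a minimal sketch of a recognizer and a generator for the same toy language. The language chosen, strings of the form a^n b^n with n ≥ 1, and the function names `recognize` and `generate` are assumptions made for illustration only.

```python
import random


def recognize(s):
    """Recognizer: accept exactly the strings a^n b^n with n >= 1."""
    n = len(s) // 2
    return n >= 1 and s == "a" * n + "b" * n


def generate(rng):
    """Generator: each call (a 'button push') yields some sentence of the language.

    Which sentence comes out depends on the random source, so the caller
    cannot predict it.
    """
    n = 1
    while rng.random() < 0.5:
        n += 1
    return "a" * n + "b" * n


rng = random.Random()
for _ in range(3):
    sentence = generate(rng)
    print(sentence, recognize(sentence))
```

Every string the generator produces is accepted by the recognizer, which is the sense in which both devices define the same language.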

Formal Methods of Describing Syntax

- Context-Free Grammars

– Developed by Noam Chomsky in the mid-1950s

– Language generators, meant to describe the syntax of natural languages

– Define a class of languages called context-free languages

- Backus-Naur Form (1959)

– Invented by John Backus to describe the syntax of ALGOL 58

– BNF is equivalent to context-free grammars

- BNF Fundamentals

– In BNF, abstractions are used to represent classes of syntactic structures–they act like syntactic variables (also called nonterminal symbols, or just nonterminals)

– Terminals are lexemes or tokens

– A rule has a left-hand side (LHS), which is a nonterminal, and a right-hand side (RHS), which is a string of terminals and/or nonterminals

- Nonterminals are often enclosed in angle brackets

- Examples of BNF rules:

– <ident_list> → identifier | identifier, <ident_list>

– <if_stmt> → if <logic_expr> then <stmt>

- Grammar: a finite non-empty set of rules

- A start symbol is a special element of the nonterminals of a grammar

A grammar is a generative device for defining languages. The sentences of the language are generated through a sequence of applications of the rules, beginning with a special nonterminal of the grammar called the start symbol. This sequence of rule applications is called a derivation. In a grammar for a complete programming language, the start symbol represents a complete program and is often named <program>.

Every string of symbols in a derivation is a sentential form. A sentence is a sentential form that has only terminal symbols. A leftmost derivation is one in which the leftmost nonterminal in each sentential form is the one that is expanded; a rightmost derivation expands the rightmost nonterminal instead. A derivation may also be neither leftmost nor rightmost.
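A leftmost derivation can be traced mechanically. The sketch below assumes a small assignment-statement grammar that is not given in this post; the dictionary `GRAMMAR`, the function `leftmost_derivation`, and the list of rule indices are all illustrative names.

```python
# Assumed example grammar (each nonterminal maps to its alternative RHSs):
#   <assign> -> <id> = <expr>
#   <id>     -> A | B | C
#   <expr>   -> <id> + <expr> | <id> * <expr> | ( <expr> ) | <id>
GRAMMAR = {
    "<assign>": [["<id>", "=", "<expr>"]],
    "<id>": [["A"], ["B"], ["C"]],
    "<expr>": [
        ["<id>", "+", "<expr>"],
        ["<id>", "*", "<expr>"],
        ["(", "<expr>", ")"],
        ["<id>"],
    ],
}


def leftmost_derivation(start, choices):
    """Expand the leftmost nonterminal once per choice; return every sentential form."""
    form = [start]
    forms = [" ".join(form)]
    for choice in choices:
        # Position of the leftmost nonterminal in the current sentential form.
        idx = next(i for i, sym in enumerate(form) if sym in GRAMMAR)
        form = form[:idx] + GRAMMAR[form[idx]][choice] + form[idx + 1:]
        forms.append(" ".join(form))
    return forms


# Each number selects which alternative to apply at that step.
for sentential_form in leftmost_derivation("<assign>", [0, 0, 1, 1, 3, 2]):
    print("=>", sentential_form)
```

The first line printed is the start symbol, every later line is a sentential form, and the last line, `A = B * C`, is a sentence because it contains only terminals.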

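A grammar is ambiguous if and only if it generates a sentential form that has two or more distinct parse trees. If the parse tree is used to indicate the precedence levels of the operators, the grammar must not be ambiguous, because two different trees for the same expression would imply two different orders of evaluation.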
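Why ambiguity matters can be seen by evaluating two parse trees of the same sentence. The sketch assumes an ambiguous expression grammar of the form <expr> → <expr> + <expr> | <expr> * <expr> | number, which is not taken from this post, and represents parse trees as nested tuples; `evaluate` and `yield_of` are hypothetical helper names.

```python
def evaluate(tree):
    """Evaluate a parse tree given as an int leaf or an (op, left, right) tuple."""
    if isinstance(tree, int):
        return tree
    op, left, right = tree
    l, r = evaluate(left), evaluate(right)
    return l + r if op == "+" else l * r


def yield_of(tree):
    """Read the leaves of a parse tree left to right, giving back the sentence."""
    if isinstance(tree, int):
        return [str(tree)]
    op, left, right = tree
    return yield_of(left) + [op] + yield_of(right)


# Two distinct parse trees for the same sentence "2 + 3 * 4".
tree_a = ("+", 2, ("*", 3, 4))  # * is lower in the tree, so it is applied first
tree_b = ("*", ("+", 2, 3), 4)  # + is lower in the tree, so it is applied first

print(" ".join(yield_of(tree_a)), "->", evaluate(tree_a))
print(" ".join(yield_of(tree_b)), "->", evaluate(tree_b))
```

Both trees have the same yield but evaluate to different values, so a grammar that allows both cannot be used to fix operator precedence.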

Attribute Grammars

An attribute grammar is a device used to describe more of the structure of a programming language than can be described with a context-free grammar. An attribute grammar is an extension to a context-free grammar. The extension allows certain language rules to be conveniently described, such as type compatibility.

Before we formally define the form of attribute grammars, we must clarify the concept of static semantics. There are some characteristics of the structure of programming languages that are difficult to describe with BNF, and some that are impossible. As an example of a syntax rule that is difficult to specify with BNF, consider type compatibility rules. In Java, for example, a floating-point value cannot be assigned to an integer type variable, although the opposite is legal. Although this restriction can be specified in BNF, it requires additional nonterminal symbols and rules. If all of the typing rules of Java were specified in BNF, the grammar would become too large to be useful, because the size of the grammar determines the size of the syntax analyzer. As an example of a syntax rule that cannot be specified in BNF, consider the common rule that all variables must be declared before they are referenced. It has been proven that this rule cannot be specified in BNF.

Attribute grammars are context-free grammars to which have been added attributes, attribute computation functions, and predicate functions. Attributes, which are associated with grammar symbols (the terminal and nonterminal symbols), are similar to variables in the sense that they can have values assigned to them. Attribute computation functions, sometimes called semantic functions, are associated with grammar rules. They are used to specify how attribute values are computed. Predicate functions, which state the static semantic rules of the language, are associated with grammar rules.
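The three ingredients (attributes, semantic functions, and predicate functions) can be sketched for an assumed rule <assign> → <var> = <expr>, where <expr> is a sum of variables. Everything named here is hypothetical: the `SYMBOL_TABLE` standing in for declarations, the type names `int` and `real`, and the attribute names `actual_type` and `expected_type`.

```python
# Hypothetical symbol table: the declared type of each variable.
SYMBOL_TABLE = {"A": "int", "B": "real", "C": "int"}


def var_actual_type(name):
    """Attribute of <var>: its declared type. An undeclared variable is a static error."""
    if name not in SYMBOL_TABLE:
        raise NameError(f"variable {name} is referenced before it is declared")
    return SYMBOL_TABLE[name]


def expr_actual_type(operands):
    """Semantic function for <expr>: computed from the types of its operands."""
    types = [var_actual_type(v) for v in operands]
    return "int" if all(t == "int" for t in types) else "real"


def check_assign(target, operands):
    """Predicate function for <assign> -> <var> = <expr>.

    expected_type comes from the target variable; actual_type is computed
    from the expression. Following the Java rule in the text, an int value
    may be assigned to a real variable, but not the other way round.
    """
    expected_type = var_actual_type(target)
    actual_type = expr_actual_type(operands)
    return actual_type == expected_type or (
        expected_type == "real" and actual_type == "int"
    )


print(check_assign("A", ["A", "C"]))  # int = int + int
print(check_assign("A", ["A", "B"]))  # int = int + real
print(check_assign("B", ["A", "C"]))  # real = int + int
```

The declared-before-use rule, which BNF cannot express, appears here as the lookup failure in `var_actual_type`.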

Semantics

There is no single widely accepted notation or formalism for describing semantics.

A methodology and notation for semantics is needed for several reasons:

- Programmers need to know what statements mean

- Compiler writers must know exactly what language constructs do

- Correctness proofs would be possible

- Compiler generators would be possible

- Designers could detect ambiguities and inconsistencies

The idea behind operational semantics is to describe the meaning of a statement or program by specifying the effects of running it on a machine. The effects on the machine are viewed as the sequence of changes in its state, where the machine’s state is the collection of the values in its storage. An obvious operational semantics description, then, is given by executing a compiled version of the program on a computer. Most programmers have, on at least one occasion, written a small test program to determine the meaning of some programming language construct, often while learning the language. Essentially, what such a programmer is doing is using operational semantics to determine the meaning of the construct.

There are several problems with using this approach for complete formal semantics descriptions. First, the individual steps in the execution of machine language and the resulting changes to the state of the machine are too small and too numerous. Second, the storage of a real computer is too large and complex. There are usually several levels of memory devices, as well as connections to innumerable other computers and memory devices through networks. Therefore, machine languages and real computers are not used for formal operational semantics. Rather, intermediate-level languages and interpreters for idealized computers are designed specifically for the process.

There are different levels of uses of operational semantics. At the highest level, the interest is in the final result of the execution of a complete program. This is sometimes called natural operational semantics. At the lowest level, operational semantics can be used to determine the precise meaning of a program through an examination of the complete sequence of state changes that occur when the program is executed. This use is sometimes called structural operational semantics.
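The idea of meaning as a sequence of state changes can be sketched with an interpreter for an idealized machine whose state is just a dictionary of variable values. The statement format `(target, op, left, right)` and the function names `step` and `run` are assumptions made for this example.

```python
def step(state, stmt):
    """Execute one assignment statement and return the new machine state.

    A statement is (target, op, left, right); an operand is either an int
    literal or the name of a variable already present in the state.
    """
    target, op, left, right = stmt

    def value(operand):
        return state[operand] if isinstance(operand, str) else operand

    l, r = value(left), value(right)
    result = l + r if op == "+" else l * r
    return {**state, target: result}


def run(program, state):
    """Return the complete sequence of states, starting with the initial one."""
    trace = [dict(state)]
    for stmt in program:
        state = step(state, stmt)
        trace.append(state)
    return trace


program = [
    ("x", "+", 1, 2),      # x = 1 + 2
    ("y", "*", "x", 4),    # y = x * 4
    ("x", "+", "x", "y"),  # x = x + y
]
trace = run(program, {})
for state in trace:
    print(state)
print("final state:", trace[-1])
```

Looking only at `trace[-1]` corresponds to the highest-level use described above, where just the final result matters; inspecting the whole `trace` corresponds to the lowest-level use, where every state change is examined.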

Denotational semantics is the most rigorous and most widely known formal method for describing the meaning of programs. It is solidly based on recursive function theory. A thorough discussion of the use of denotational semantics to describe the semantics of programming languages is necessarily long and complex.
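As a very small taste of the approach, a denotational description maps each syntactic entity to a mathematical object by means of a recursive function. The sketch below does this for binary numerals, mapping each numeral string to the non-negative integer it denotes; the function name `m_bin` and the choice of binary numerals as the example are assumptions for illustration.

```python
def m_bin(numeral):
    """Map a binary numeral (a string of '0' and '1') to the integer it denotes.

    The definition follows the shape of the numeral:
      a single digit denotes 0 or 1;
      a numeral followed by a digit denotes 2 * (value of numeral) + (value of digit).
    """
    if not numeral or set(numeral) - {"0", "1"}:
        raise ValueError(f"not a binary numeral: {numeral!r}")
    if numeral == "0":
        return 0
    if numeral == "1":
        return 1
    return 2 * m_bin(numeral[:-1]) + m_bin(numeral[-1])


for numeral in ["0", "1", "10", "110", "1011"]:
    print(numeral, "denotes", m_bin(numeral))
```

No machine state appears anywhere in the definition: the meaning of a numeral is given purely by the meanings of its parts.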

In axiomatic semantics, there is no model of the state of a machine or program, nor a model of the state changes that take place when the program is executed. The meaning of a program is based on relationships among program variables and constants, which are the same for every execution of the program. Axiomatic semantics has two distinct applications: program verification and program semantics specification. Axiomatic semantics is based on mathematical logic.
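A short worked example may help show what such a relationship among variables looks like. It assumes the standard rule for assignment statements, which this post does not state: the precondition P of an assignment x = E with postcondition Q is obtained by substituting E for x in Q. The statement and postcondition below are chosen only for illustration, and division is taken to be ordinary real-number division.

```latex
\begin{align*}
&\{P\}\quad a = b / 2 - 1 \quad \{a < 10\} \\
&P \;=\; (a < 10)\,[a \mapsto b/2 - 1] \\
&\phantom{P} \;=\; b/2 - 1 < 10 \\
&\phantom{P} \;=\; b < 22
\end{align*}
```

So if b < 22 holds before the assignment, a < 10 is guaranteed to hold after it, and this relationship is the same for every execution of the statement.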
